Board Games Buddy

Part 1: Artificialis Intelligentia et Circenses

The notion of artificial intelligence is a concept whose traces can be found up to more than 1000 years before our era, but which experienced an especially lightning rise from the 19th century with the work of many mathematicians and engineers who began to formalize its principles. Then, a convergence of innovative ideas at the dawn of the 20th century established a close connection with this field as soon as it appeared that the brain was made up of neurons processing electrical impulses. The Claude Shannon’s information theory and signal processing, along with Alan Turing’s computation theory, clearly suggested the possibility of realizing one of the great humanity’s dreams: the creation of a thinking machine. In this context, it is therefore not surprising that the development of artificial intelligence has gone hand in hand with the rise of computing.

The algorithmic and symbolic path

Interestingly, however, the early attempts to create AI were completely against the path chosen by evolution. All animals, from the simplest to the most elaborate, have neural networks that allow them to make all kinds of complex decisions for their livelihood. This is true even for those we consider the most “stupid” on the pretext that they don’t seem to have a shred of imagination. However, this does not prevent them from generating a vast directory of sophisticated behaviours we would have a hard time understanding how they work.

But human “noble and superior” thought is strongly associated with its capacities for abstraction, reasoning and symbolic manipulation. Now it turns out that the manipulation of symbols is comparable to a calculation and the latter can easily be automated on a computer. This is how the first AIs appeared, in the form of programs manipulating abstract symbols supposed to represent the problems to be solved. Intelligence then came less from the machine itself than from its designers, since it was the latter who incorporated all their knowledge and methods of reasoning by creating a long series of automatisms and links between symbols that were intrinsically meaningless.

AI is therefore a field where we are less concerned with defining what is the essence of intelligence than with building a process that behaves as such. But anyway, it appeared very early that many real-life problems could not be easily solved with algorithms, that is, with procedures handling a handful of symbols or information through a few rigid rules. It was then evident that creating true intelligence was a vastly underestimated challenge.

Despite all that, there are domains which, by their deterministic and limited nature, are eminently suitable to the algorithmic and symbolic approach. A good part of board games, especially pure strategy games [1]A pure strategy game is a game where each player has access to the whole information relative to all the game events, like in chess or checkers. , are particularly good candidates. As these are often disciplines that require a lot of intelligence and practice for a human being, but as their rules and objectives are perfectly clear, they have always been chosen as a yardstick for recording progress in AI. In fact, they allow us to get away from all kinds of problems for which the rules are implicit, the objectives totally vague and where the performances can largely depend on circumstances; that is, like almost the majority of situations that humans and the rest of the animal kingdom have to face on a daily basis.

Chess has long been considered as a benchmark for confronting human intelligence with machines, with Shannon’s first paper on this theme back to 1949. And even if the first attempts showed poor performance, the algorithms that were used in this game and many others have been improved gradually over decades of research on the subject. The most used approach in these algorithms is without a doubt something akin to “if I play this move, then my opponent could play this or that move, which would allow me to etc.”. In short, it involves combinatorial evaluation of a maximum of possibilities within a limited time. But if humans cannot match AI on the field of computation speed and in game moves exploration, it also turns out that speed is not everything.

To understand this problem, it is good to start from two games at opposite ends in terms of combinatorial complexity, namely tic-tac-toe and go. If we look at the number of different configurations that the game board can take, tic-tac-toe boils down to about a thousand possibilities. Since a modern desktop computer can easily assess a hundred thousand positions per second without breaking a sweat, the game can be folded in a hundredth of a second. Whatever the move you make, the machine may have anticipated it when it worked out its previous move. Having a full view of all the possibilities, the computer will be able to play perfectly and will not make any single strategic mistakes.

But if we look at the game of go and its $10^{170}$ different board configurations, the picture is quite different. This value is so disproportionate that to represent it you have to take this picture: for each atom of the universe (approximately $10^{80}$) imagine that it contains another universe also including $10^{80}$ atoms. And there you are still a little bit below the target. It goes without saying that dealing with this gargantuan number of contingencies is beyond reach of any computer if it simply tries to list them all.

With “only” $10^{47}$ combinations, chess is much more affordable, but still requires a lot of skill and heuristics to overcome. And the key to doing this, since the tree of possibilities is too large to be fully traversed, is being able to intelligently assess the state of any intermediate position during a game. Imagine having an oracle which, for each configuration of the board, returns a number between 0 and 1 to indicate what your chances are of winning the game by playing perfectly afterwards. It goes without saying than any confrontation becomes much easier, because we only need to test a limited set of moves in advance to select the one that maximizes the result delivered by the oracle.

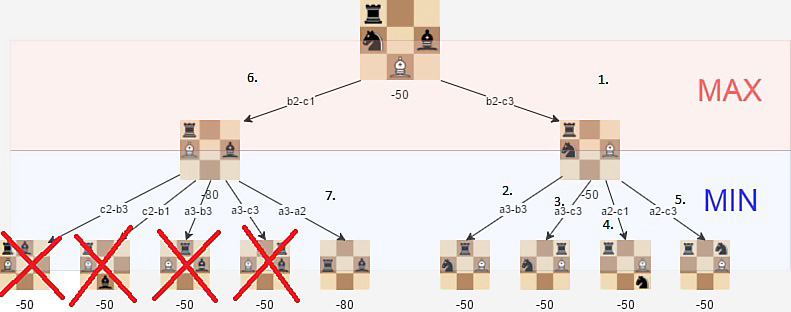

The image below, taken from an article on freecodecamp, illustrates the principle on a artificial example where White has to define its next move. The idea is to choose the branch that returns the highest score, knowing that the opponent will try to minimize it.

Of course, such an oracle does not exist, but it is possible to come close by building what is called an evaluation function. A basic example for chess might be to simply assign a score for each piece (the queen gets the highest score, a pawn the smallest) and sum them up for those still on the chessboard. This function is obviously much more complex in a modern chess program, but you get the idea.

It is with this algorithm, a lot of optimization, an overboosted computer and a carefully crafted evaluation function that DeepBlue was able to beat the best chess player in the world, Garry Kasparov , in 1997. Of course, it is no longer feasible to play perfectly, because of the calculation horizon getting blurred when trying to anticipate the moves in advance. But this problem is offset by the intuition and the expertise that designers can bring to their evaluation function.

Unfortunately, this is a double-edged sword, as the playing quality of such an approach relies entirely on the promise of ultimately incorporating all the know-how of an experienced player into that function. This turns out to be a step which is not only sensitive, but which must also be repeated for each new problem because of its specificity. Moreover, it is simply not always possible to produce a correct evaluation function, and it is for this reason that the game of go has long been dominated by the superiority of human intuition.

The connectionist path

Like many other challenges, go cannot be solved easily with a purely algorithmic approach. And to be fair, we also have to highlight that many problems are resolved very well without having recourse to any elaborate conceptualization mechanism. For example, the animal kingdom very quickly acquired a powerful visual system, capable of processing complex images while being accessible to the simplest animals. The key to this success lies in the neuronal architecture developed by the animal kingdom and which, even with a small handful of neurons, is able to associate an appropriate behaviour in response to a given stimulus (visual or otherwise).

This kind of system does not require any rigorous reasoning process able to find the optimal solution to a problem. Rather, it is about determining as quickly as possible a good behavioural response to a situation that can often be lethal. And as sophisticated as the human brains are, it is especially on top of such mechanisms that the elaborate layers of thinking of our neocortices have evolved. All animals have this intuitive capacity shaped by millions of years of evolution, difficult to translate in terms of algorithms, and which allow them to progress easily in a world that is certainly hostile and in perpetual change.

The equivalent of this paradigm in the computer field is what is called machine learning. The idea then is not to build custom algorithms to solve some given problems, but rather to build algorithms that digest data and examples to discover through experience what the best answer to a given situation is. This discipline has given rise to numerous techniques in the field of mathematics and statistics, but it is above all the methods based on deep neural networks which have recently made it possible to achieve the most spectacular advances.

One of the earliest successes of the connectionist approach applied to board games dates back to 1992 and the work of Gerald Tesauro on the game of Backgammon. The idea was to use a small neural network to determine the statistics of the next move to play, and to train the program on confrontations where the machine faces itself. This program then rose to the rank of the best players in the world, a first worldwide for this kind of competition.

Many games require above all a very good reading of the sequence of events rather than advanced reasoning skills. These are games where the primary difficulty does not come from a combinatorial explosion problem as in the previous paragraph, but rather from the fact that the players do not have access to all the information necessary to reason perfectly. The game of poker is a perfect example, and it was only in 2017 that real breakthroughs allowed computers to rise to the level of the best players in the world. It has been achieved by using deep learning techniques in order to better estimate the actions to be taken in response to those chosen by the other players.

The list of actions a poker player has to consider is minimal, limited to folding, calling or raising. But the goal is certainly not about trying to anticipate dozens of moves in advance regarding what the opponents are going to do, it is more about understanding what the strength of their hand is, given their own strategy and the choices they made. The purpose is very different, because the art of poker is to deceive your enemy by bluffing and, conversely, to find out what others are hiding from you.

In the context of machine learning, the subdiscipline aimed at teaching an agent to make the best decisions given the results of its interaction with an environment is called reinforcement learning. And just like with algorithms, it is a discipline that extends far beyond the recreational domain of board games.

A formidable combination?

The strict algorithmic approach is powerful for reasoning about the best strategy for solving a problem, but also suffers from a pitfall resulting from its purely abstract style. Unlike the human species whose high-level thinking evolved on top of its intuitive abilities to understand and analyse the real world, an algorithm “reasons” only in terms of synthetic symbols defined by its designer. And unfortunately, many situations do not easily fit within this quite limiting simplification. The tremendous difficulty of writing an algorithm that accomplishes a visual recognition task or offers a good evaluation function to process a go board configuration is just one among many examples.

The connectionist approach, inspired by the brain functions of the animal kingdom, currently allows the creation of extremely powerful AI for a single given task, sometimes even exceeding human skills. But beyond the elaborate calculation they perform, they are unable of the slightest faculty of reasoning. What they do is more akin to unravelling a complex sensory stimulus and transforming it into some useful value.

Nevertheless, an interesting direction initially proposed by DeepMind through its AI AlphaGo, consists in blending the qualities of both the algorithmic and connectionist approaches. With this concept, they have achieved the feat of creating an AI that ultimately proves to be superior to the best go player in the world. Their idea still consists in traversing a combinatorial exploration tree, but by guiding the research through the intuition generated by a neural network. This eliminates the need for the famous and delicate evaluation function, because it is here the neural network, trained on thousands of self-played game episodes, which is responsible for determining whether a game situation is advantageous or not. It is this same network that saves the algorithm from having to estimate myriads of possible moves, most of them poor, but instead allows it to focus its attention on the most relevant ones.

The path introduced by DeepMind, although artificial and limited to a single game at a time, tries to come closer to human intelligence. Sure, the quest for a real general AI, capable of reasoning in all circumstances and of facing new problems promises to be long. But it is undeniable that the field of games offers an extremely favourable framework for its emergence.

Conclusion

Board games, and games in general, is not just for entertainment. Because, by affording a large set of various and more or less complex rules, they ultimately provide an ideal environment where humans and AI can confront and measure themselves against each other. It thus allows scientific research to face ever greater challenges to improve the performance of their systems.

In this context, the goal of this project is mainly to take advantage of the latest advances in the field to offer AIs that can play all kinds of board games. The idea is to see if it is possible to build some kind of strong partner to confront, while studying the details of the best algorithms available. A particular emphasis is placed on so-called general methods, in the sense that they can be applied to an entire class of games at once, without the need to write code specific to any game (except to code the rules of course).

In the next chapter, we’ll come back to the high-level goals of this project, as well as to the architecture and design choices selected to achieve them.

[1]: A pure strategy game is a game where each player has access to the whole information relative to all the game events, like in chess or checkers.